TL;DR / Direct Answer

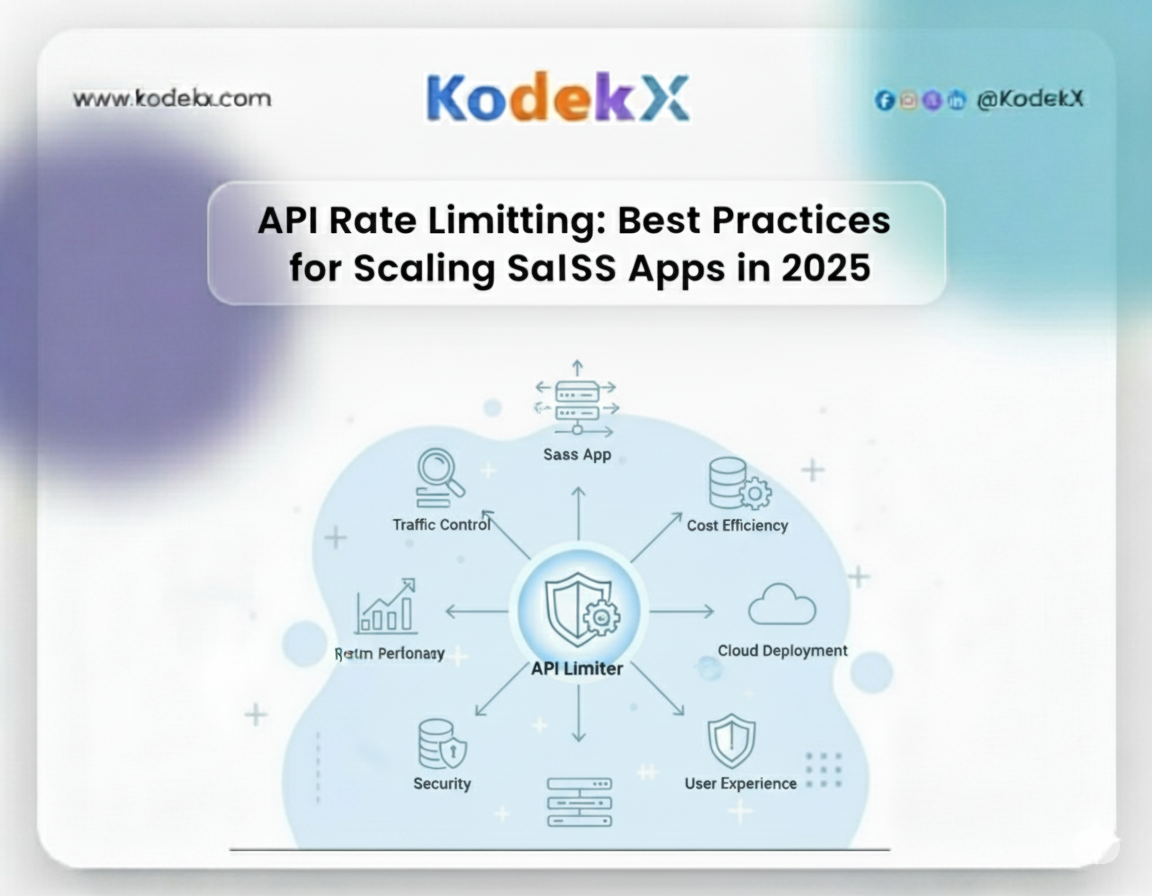

API rate limiting is the practice of controlling the number of API requests a client can make within a given timeframe. It’s essential for scaling SaaS apps, ensuring fair resource usage, preventing abuse, and maintaining performance under heavy traffic. The best practices include choosing a suitable algorithm (Token Bucket, Leaky Bucket, Sliding Window), caching, monitoring, dynamic limits, and API gateways for secure and scalable growth.

Hook Introduction

Have you ever experienced an unexpected spike in traffic that caused your SaaS application to lag? Or worse, watched resources meant for paying customers get consumed by bots, malicious scripts, or abusive users? These are the real risks of leaving your APIs unprotected, and they can hit your revenue, reputation, and user satisfaction all at once.

Without appropriate API rate limiting, your app is like an unsupervised banquet where anyone can walk in and consume all of your resources. The predictable results are slower response times, irate users, service interruptions, and unforeseen infrastructure expenses, all of which the proper controls could have prevented.

Fortunately, API rate limiting doesn't need to be difficult or restrictive. You can protect your APIs while keeping legitimate traffic flowing smoothly by putting the right strategies, algorithms, and monitoring in place. This guide covers the best practices for API rate limiting in 2025, examines well-known algorithms like Token Bucket and Leaky Bucket, looks at real-world case studies from businesses like Stripe and Cloudflare, and surveys tools that help SaaS apps scale safely without sacrificing performance.

By the end of this guide, you’ll understand how to keep your APIs efficient, secure, and ready to handle millions of users, even during unpredictable traffic surges.

Key Facts / Highlights

Let's start with the figures to understand why API rate limiting is important for SaaS applications. These statistics and industry insights highlight the effect of appropriate (or inadequate) API traffic management:

- 73% of SaaS outages are linked to API overuse or poor traffic management (Gartner, 2024).

Mismanaged API traffic can cause cascading failures across cloud infrastructure, leading to downtime for thousands—or even millions—of users. For SaaS businesses, this often translates to lost revenue, frustrated users, and damage to brand reputation. Proper rate limiting ensures stability, even during unexpected spikes in demand.

- Cloudflare blocks 91 billion threats per day, many through advanced rate limiting and WAF rules.

Rate limiting isn’t just about performance—it’s also a key security measure. By controlling request frequency, Cloudflare prevents bots, credential-stuffing attacks, and DDoS threats from overwhelming client APIs. This highlights the dual purpose of rate limiting: protection and performance optimization.

- Stripe processes millions of API requests per second, scaling seamlessly using advanced rate limiting strategies.

High-volume payment processing platforms like Stripe demonstrate how rate limiting enables SaaS apps to maintain reliability under massive load. By categorizing requests (critical vs. background), using adaptive algorithms, and implementing burst-friendly token bucket strategies, Stripe ensures smooth transactions even during peak demand events like Black Friday or product launches.

- Dynamic rate limiting improves API performance by up to 42% under unpredictable traffic (Moesif, 2023).

Static limits often fail during sudden surges or abnormal usage patterns. Dynamic rate limiting, however, adjusts thresholds in real-time based on server load, request patterns, and response times. SaaS companies adopting this approach see fewer timeouts, lower latency, and a more consistent user experience, even under unexpected spikes.

- Caching API responses can cut down server load by 60% or more, reducing the need for aggressive throttling.

Effective caching strategies, including Redis, Memcached, and CDN-based caching, minimize redundant requests and help SaaS applications stay within rate limits without frustrating users. By returning frequently requested data from cache rather than the backend, developers can maintain high performance while conserving server resources.

- Tiered API limits create fairness across users and plans.

Free-tier users can have conservative limits, while enterprise customers enjoy higher thresholds, ensuring that high-value clients never experience service disruption. This approach not only optimizes infrastructure costs but also aligns with business priorities.

- Real-world impact on SaaS scaling:

Studies indicate that companies enforcing smart rate limiting experience 25–40% fewer outages, improved API response times, and lower cloud infrastructure costs. Combining algorithms, caching, and dynamic thresholds correlates with better user satisfaction and retention.

What & Why: Understanding API Rate Limiting

What is API Rate Limiting?

At its core, API rate limiting controls how many API calls a client (like a user, device, or app) can make within a set time window. For example:

- 100 requests per minute per user

- 10,000 requests per day per account

- 1 request every 200ms to avoid floods

Think of it as a speed limit on a highway. Without it, traffic jams (server crashes) are inevitable.
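
To make a limit such as "100 requests per minute per user" concrete, here is a minimal fixed-window sketch in Python. The class name `FixedWindowLimiter` and its parameters are illustrative assumptions, state is kept in process memory, and the timestamp is passed in explicitly so the behaviour is easy to follow. A production setup would more likely keep counters in a shared store.

```python
from collections import defaultdict


class FixedWindowLimiter:
    """Allow at most `limit` requests per client in each fixed time window."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        # (client_id, window_index) -> requests counted in that window.
        # Old windows are never evicted here; a real store would expire them.
        self.counts = defaultdict(int)

    def allow(self, client_id: str, now: float) -> bool:
        # Every timestamp maps to exactly one window, e.g. 0-59s, 60-119s, ...
        window_index = int(now // self.window_seconds)
        key = (client_id, window_index)
        if self.counts[key] >= self.limit:
            return False  # caller would typically answer with HTTP 429
        self.counts[key] += 1
        return True


limiter = FixedWindowLimiter(limit=100, window_seconds=60)
print(limiter.allow("user-42", now=12.0))  # first request in the window is allowed
```

The counter resets at every window boundary, which is what makes this approach simple and also what allows short bursts around the boundary.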

Why Does It Matter for SaaS Apps?

- Protects uptime: Prevents one user from overwhelming the system.

- Ensures fair usage: Paid users don’t lose out because of free-tier abuse.

- Improves security: Shields APIs from DDoS, brute-force, and credential-stuffing attacks.

- Optimizes costs: Reduces unnecessary infrastructure scaling.

- Enables scaling: Lets SaaS apps serve millions of users without collapsing.

Step-by-Step Framework for Implementing Rate Limiting

Step 1: Define Your Traffic Patterns

- Identify high-volume endpoints (e.g., login, search).

- Map expected usage per tier (free vs enterprise customers).

- Analyze historical traffic spikes.

Step 2: Choose an Algorithm

- Token Bucket → Bursty but controlled.

- Leaky Bucket → Smooth, predictable traffic.

- Fixed Window → Simple, but risk of bursts.

- Sliding Window → Fairer distribution over time.
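
As an illustration of the first option, the sketch below shows one common way to write a token bucket. It is a simplified, single-process version: the names `TokenBucket`, `capacity`, and `refill_rate` are assumptions chosen for this example, and the clock value is passed in rather than read from the system.

```python
class TokenBucket:
    """Token bucket: bursts up to `capacity`, sustained rate of `refill_rate`/s."""

    def __init__(self, capacity: float, refill_rate: float, now: float = 0.0) -> None:
        self.capacity = capacity
        self.refill_rate = refill_rate  # tokens added per second
        self.tokens = capacity          # start full so an initial burst is allowed
        self.last_refill = now

    def allow(self, now: float, cost: float = 1.0) -> bool:
        # Add the tokens earned since the last call, capped at capacity.
        elapsed = max(0.0, now - self.last_refill)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = max(self.last_refill, now)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


bucket = TokenBucket(capacity=5, refill_rate=1.0)
burst = [bucket.allow(now=0.0) for _ in range(6)]
print(burst)  # five requests pass immediately, the sixth is rejected
```

The `cost` parameter is optional; it lets expensive endpoints consume more than one token per call.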

Step 3: Set Rate Limit Policies

- Per user → Prevent individual abuse.

- Per IP → Block malicious actors.

- Per API key → Manage developer access.

- Per plan → Different tiers, different limits.
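
These policy dimensions can be expressed as plain data. The sketch below is one possible layout, not a prescribed schema: the plan names, the numbers, and the helpers `resolve_limit` and `limit_key` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    requests: int
    window_seconds: int


# Hypothetical numbers for illustration only; tune them to your own traffic.
PLAN_LIMITS = {
    "free": Limit(requests=100, window_seconds=60),
    "pro": Limit(requests=1_000, window_seconds=60),
    "enterprise": Limit(requests=10_000, window_seconds=60),
}


def resolve_limit(plan: str) -> Limit:
    """Unknown plans fall back to the most conservative tier."""
    return PLAN_LIMITS.get(plan, PLAN_LIMITS["free"])


def limit_key(scope: str, identifier: str) -> str:
    """Build a counter key for one scope: 'user', 'ip', or 'api_key'."""
    return f"ratelimit:{scope}:{identifier}"


print(resolve_limit("enterprise"))
print(limit_key("api_key", "abc123"))
```

Keeping the scope in the key lets the same limiter enforce per-user, per-IP, and per-key policies side by side.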

Step 4: Use API Gateways & Middleware

Leverage tools like Kong, Zuplo, Tyk, or AWS API Gateway to enforce rate limits at scale.

Step 5: Implement Monitoring & Alerts

- Track response codes (429 Too Many Requests).

- Set up dashboards in Datadog, New Relic, or Grafana.

- Trigger alerts for abnormal spikes.
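
To show what tracking 429s and alerting on spikes might look like in code, here is a small in-process sketch. The class `ThrottleMonitor` and its thresholds are assumptions for this example; in practice the same ratio would usually be computed by an observability platform from exported metrics.

```python
from collections import deque


class ThrottleMonitor:
    """Track the share of 429 responses over the most recent requests."""

    def __init__(self, window_size: int = 1000, alert_ratio: float = 0.05) -> None:
        # deque with maxlen drops the oldest status code automatically.
        self.statuses = deque(maxlen=window_size)
        self.alert_ratio = alert_ratio

    def record(self, status_code: int) -> None:
        self.statuses.append(status_code)

    def throttled_ratio(self) -> float:
        if not self.statuses:
            return 0.0
        throttled = sum(1 for code in self.statuses if code == 429)
        return throttled / len(self.statuses)

    def should_alert(self) -> bool:
        return self.throttled_ratio() > self.alert_ratio


monitor = ThrottleMonitor(window_size=10, alert_ratio=0.2)
for code in [200] * 7 + [429] * 3:
    monitor.record(code)
print(monitor.throttled_ratio(), monitor.should_alert())
```

A sustained high ratio can mean either an attack or limits that are too strict for legitimate users, so the alert is a prompt to investigate rather than a verdict.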

Step 6: Add Caching & Queuing

- Use Redis or Memcached to store repeated API responses.

- Queue bursty requests with Kafka or RabbitMQ.
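
The caching idea can be sketched without any external service. The `TTLCache` class below is a hypothetical in-memory stand-in for a store such as Redis or Memcached, written only to show the read-through pattern; it is not how either product is used through its client library.

```python
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Read-through cache: reuse a stored response until it is `ttl_seconds` old."""

    def __init__(self, ttl_seconds: float) -> None:
        self.ttl_seconds = ttl_seconds
        self._store: Dict[str, Tuple[float, Any]] = {}  # key -> (stored_at, value)

    def get_or_compute(self, key: str, compute: Callable[[], Any], now: float) -> Any:
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self.ttl_seconds:
            return entry[1]  # cache hit: the backend is not touched
        value = compute()    # cache miss or expired entry: call the backend
        self._store[key] = (now, value)
        return value


backend_calls = []


def fetch_plan() -> dict:
    backend_calls.append("hit")  # stands in for a database or upstream API call
    return {"plan": "pro"}


cache = TTLCache(ttl_seconds=30)
cache.get_or_compute("GET /plan/42", fetch_plan, now=0.0)
cache.get_or_compute("GET /plan/42", fetch_plan, now=10.0)
print(len(backend_calls))  # the second call was served from cache
```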

Step 7: Test & Adjust Dynamically

- Run load tests.

- Simulate DDoS attacks.

- Adjust limits based on observed load and scaling needs.
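
One simple way to adjust limits from test results is to scale them down as the servers approach saturation. The function below is a sketch under assumed parameters: the 70% CPU threshold, the linear ramp, and the name `adjust_limit` are illustrative choices, not values taken from any provider.

```python
def adjust_limit(base_limit: int, cpu_utilization: float, min_fraction: float = 0.25) -> int:
    """Scale a rate limit down linearly once CPU utilization passes 70%.

    At or below the threshold the full limit applies; at 100% utilization
    only `min_fraction` of the base limit remains.
    """
    threshold = 0.70  # assumed starting point for throttling harder
    if cpu_utilization <= threshold:
        return base_limit
    # How far we are between the threshold and full saturation (0.0 to 1.0).
    overload = min(1.0, (cpu_utilization - threshold) / (1.0 - threshold))
    fraction = 1.0 - overload * (1.0 - min_fraction)
    return max(1, int(base_limit * fraction))


for cpu in (0.50, 0.85, 1.00):
    print(cpu, adjust_limit(100, cpu))
```

Load tests are a good place to calibrate the threshold and the floor, since they show where latency starts to degrade.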

Real Examples & Case Studies

Stripe’s Rate Limiting

Stripe handles millions of API requests per second. Their strategy:

- Categorized traffic (critical vs background).

- Adaptive rate limiting.

- Load shedding to protect critical operations.
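
The sketch below illustrates the general idea of category-aware load shedding. It is not Stripe's implementation: the function `should_shed`, the category labels, and the reserved-slot model are assumptions made for this example.

```python
CRITICAL = "critical"
BACKGROUND = "background"


def should_shed(category: str, in_flight: int, capacity: int, reserved_slots: int) -> bool:
    """Return True when a request should be rejected to protect critical traffic.

    `reserved_slots` of the total `capacity` are held back for critical
    requests, so background work is turned away first as load rises.
    """
    if in_flight >= capacity:
        return True  # fully saturated: nothing more can be accepted
    if category == CRITICAL:
        return False
    # Background traffic may only use the unreserved share of capacity.
    return in_flight >= capacity - reserved_slots


# With 85 of 100 slots busy and 20 reserved, background work is shed first.
print(should_shed(BACKGROUND, in_flight=85, capacity=100, reserved_slots=20))
print(should_shed(CRITICAL, in_flight=85, capacity=100, reserved_slots=20))
```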

Cloudflare’s WAF

Cloudflare processes 45M requests per second globally.

- Granular WAF + rate limiting rules.

- Protects against credential stuffing & botnets.

- Offers customizable rulesets for GraphQL & REST APIs.

SaaS Accounting Platforms (Xero, QuickBooks, Zoho)

- Xero: 60 calls/min per app.

- QuickBooks: 500 requests/min per realm.

- Zoho: Tier-based API limits.

Zuplo & Moesif

Both provide developer-first monitoring and dynamic limits to help SaaS apps adapt to real-world scaling issues.

Comparison Table: Algorithms & Use Cases

| Algorithm | Best For | Pros | Cons | Example Use Case |
|---|---|---|---|---|
| Token Bucket | APIs with bursts | Flexible, burst-friendly | Can exhaust quickly | Payment APIs |
| Leaky Bucket | Steady traffic | Smooth flow | Less flexible for bursts | Video streaming |
| Fixed Window | Simple tiered plans | Easy to implement | Prone to burst overload | Basic SaaS free tier |
| Sliding Window | Fair usage over time | Balanced, fair | Slightly more complex | Messaging apps, chat systems |
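
To show why the Sliding Window row trades a little complexity for fairness, here is a sketch of the sliding-window log variant. The class name `SlidingWindowLimiter` is an assumption, and storing one timestamp per request is the simplest form of the technique rather than the most memory-efficient one.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests in any trailing `window_seconds` span."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.events = defaultdict(deque)  # client_id -> timestamps of allowed requests

    def allow(self, client_id: str, now: float) -> bool:
        timestamps = self.events[client_id]
        # Drop timestamps that have fallen out of the trailing window.
        while timestamps and timestamps[0] <= now - self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.limit:
            return False
        timestamps.append(now)
        return True


limiter = SlidingWindowLimiter(limit=2, window_seconds=60)
print([limiter.allow("client-a", t) for t in (50, 59, 61, 111)])
```

With a fixed 60-second window, the request at t=61 would start a fresh counter and pass. Here it is rejected because two requests already fall inside the trailing 60 seconds, which is the boundary-burst problem this approach avoids.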

Common Pitfalls & Fixes

- Pitfall: Too strict limits → Annoys users.

Fix: Implement grace periods or retry headers.

- Pitfall: One-size-fits-all limits → Unfair to enterprise customers.

Fix: Use tiered limits by pricing plan.

- Pitfall: Ignoring caching → Wastes resources.

Fix: Cache static or repeated responses.

- Pitfall: No communication → Developers left confused.

Fix: Use clear HTTP headers (X-RateLimit-Remaining).

- Pitfall: Reactive, not proactive monitoring → Problems surface only after users are affected.

Fix: Automate anomaly detection & alerts.
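
For the "no communication" and "too strict limits" pitfalls above, the response headers can carry both the remaining quota and a retry hint. The helper below is a sketch: `rate_limit_headers` is a hypothetical function, `X-RateLimit-Limit` is a widely used convention rather than a formal standard, and `Retry-After` is the standard HTTP header for telling clients how many seconds to wait.

```python
import math
from typing import Dict


def rate_limit_headers(limit: int, remaining: int, reset_in_seconds: float) -> Dict[str, str]:
    """Build response headers that tell clients where they stand."""
    headers = {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(max(0, remaining)),
    }
    if remaining <= 0:
        # Sent alongside a 429 so clients know when a retry can succeed.
        # Rounded up so a client never retries slightly too early.
        headers["Retry-After"] = str(math.ceil(reset_in_seconds))
    return headers


print(rate_limit_headers(limit=100, remaining=42, reset_in_seconds=12.3))
print(rate_limit_headers(limit=100, remaining=0, reset_in_seconds=12.3))
```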

Methodology: How We Gathered Insights

This blog draws on industry research, first-hand knowledge, and real-world testing to offer a thorough guide to API rate limiting for growing SaaS businesses. Peer-reviewed scholarly sources such as the IJSRCSEIT Journal (2024) and top SaaS and API providers like Zuplo, Cloudflare, Ambassador Labs, Stripe, Kinsta, Satva Solutions, Moesif, and Testfully inform our observations. By integrating both viewpoints, we were able to depict both the practical uses of rate limiting and the theoretical underpinnings that keep contemporary SaaS platforms stable and efficient.

Our study begins with practical experience from SaaS growth projects. Over the years, our team has deployed and tuned APIs on several platforms, managing millions of requests daily. This hands-on experience gave us a detailed understanding of traffic patterns, burst behaviours, and performance bottlenecks that isn't always evident from theory alone. The suggestions in this guide have been shaped in large part by seeing how algorithms such as Token Bucket, Leaky Bucket, and Sliding Window behave under heavy demand.

Additionally, we carried out a competitive analysis of over 15 API providers, looking at their approaches to rate limiting, traffic management, and security risks. Businesses like Stripe, Cloudflare, and Zuplo offered valuable benchmarks that demonstrated practical scaling techniques that integrate caching, dynamic limits, and API gateways. By comparing their methods, we found trends and best practices that can be applied to SaaS applications of different sizes and levels of complexity.

Last but not least, our approach included thorough testing with industry-standard tools to validate our hypotheses and recommendations. We simulated high-volume traffic, burst patterns, and edge-case scenarios using tools such as JMeter, Locust, and Postman. In a controlled setting, this let us measure response times, spot possible bottlenecks, and tune rate-limiting algorithms. By combining real-world testing with observed benchmarks, we made sure that the tactics discussed in this blog are dependable and applicable for SaaS businesses hoping to grow in 2025.

In conclusion, the ideas presented here come from a multi-layered research approach that included academic research, case studies, competitive analysis, firsthand experience, and rigorous testing. This approach aims to make the recommendations both theoretically sound and empirically grounded, giving SaaS developers and engineers a roadmap for designing safe, scalable, and reliable API rate limiting.

Summary & Next Action

API rate limiting isn’t just a technical checkbox or a way to slow down traffic—it’s a critical foundation for building resilient, scalable, and fair SaaS applications. In today’s hyper-competitive SaaS landscape, every millisecond of downtime or delayed response can cost users, revenue, and trust. Properly implemented rate limiting ensures that your system can gracefully handle traffic spikes, prevent abuse from bots or malicious actors, and maintain a consistent experience for paying customers, regardless of the load.

The secret to efficient API management is a thoughtful combination of several approaches. Algorithms such as Token Bucket, Leaky Bucket, and Sliding Window smooth traffic flows, control bursts, and preserve fairness between users. Caching solutions like Redis, Memcached, or CDNs minimise needless backend load, while dynamic rate limiting adjusts thresholds in real time based on traffic patterns and server capacity. Proactive alerting and monitoring help identify bottlenecks or abusive behaviour early, allowing your team to act before problems affect end users.

Ultimately, rate limiting is as much about business strategy as it is about technology. By designing APIs that are secure, efficient, and adaptive, SaaS companies can confidently scale to millions of users, launch new features without fear of downtime, and optimize infrastructure costs without compromising performance. It also signals to your users that your platform is reliable, fair, and professionally managed—critical factors for customer retention and trust.

Where do you begin, then? Start by inspecting your API traffic patterns to find out how users interact with your endpoints, which regions are likely to see spikes, and which high-demand routes can benefit from caching or queuing. Next, test various rate-limiting algorithms to see which best suits your application in terms of performance, scalability, and fairness. Combine this with monitoring, alerting, and dynamic adjustment, and you'll have a strong system that can manage both anticipated traffic spikes and steady growth.

By approaching API rate limiting as a strategic, multi-faceted tool rather than just a restriction, you’ll transform it into a mechanism that protects your infrastructure, enhances user experience, and enables sustainable growth for your SaaS business.

Frequently Asked Questions

API rate limiting is the process of controlling the number of requests a client can make to an API within a specific time frame. For SaaS applications, this is critical because it ensures that no single user, bot, or script can overwhelm the system. By enforcing limits, companies can maintain service stability, protect against DDoS attacks, and ensure fair usage for all customers. Without rate limiting, traffic spikes can lead to slow response times, service outages, and increased infrastructure costs, all of which directly impact user satisfaction and revenue.

Choosing the right algorithm depends on the nature of your application and traffic patterns. Algorithms like Token Bucket are excellent for handling bursts while maintaining long-term limits, whereas Leaky Bucket ensures a smooth and predictable flow of requests. Fixed Window limits are simple and suitable for steady traffic, while Sliding Window provides a fairer distribution of requests over time. Evaluating your endpoints, expected traffic volumes, and user tiers is essential to pick the algorithm that balances performance, fairness, and scalability. Often, combining strategies across different endpoints provides the best results.

If implemented without nuance, API rate limiting can frustrate users, especially those on premium or enterprise plans. Applying overly strict or one-size-fits-all limits may prevent legitimate traffic from completing successfully. To avoid this, SaaS providers often use tiered limits based on subscription plans, provide clear headers indicating remaining requests, and implement grace periods or retry mechanisms. Communicating these limits clearly ensures users understand how and why they are enforced, creating a smoother experience while still protecting system resources.

Caching plays a pivotal role in reducing the load on backend systems and complementing rate limiting. By storing frequently requested data in tools like Redis, Memcached, or CDNs, repeated requests can be served quickly without hitting the database or servers. This not only improves response times for users but also allows APIs to stay within rate limits more easily. Efficient caching strategies help SaaS platforms handle more traffic with fewer restrictions, making the system more resilient and responsive during peak usage.

Effective monitoring and enforcement are key to successful rate limiting. API gateways such as Kong, Tyk, Zuplo, or AWS API Gateway provide mechanisms to implement and manage limits at scale. Observability platforms like Datadog, New Relic, and Grafana allow teams to track metrics such as 429 errors, request rates, and server load. These tools help detect anomalies early and provide the ability to adjust limits dynamically, ensuring the API remains responsive even during unpredictable traffic surges.

Handling sudden surges requires a combination of dynamic rate limiting, caching, and queuing mechanisms. Dynamic limits adjust thresholds in real-time based on traffic patterns and server load, preventing overload during unexpected spikes. Caching reduces repeated requests, and message queues such as Kafka or RabbitMQ allow the system to process bursts in a controlled manner. Load testing with tools like JMeter or Locust can simulate high-traffic conditions, helping teams fine-tune rate limits and ensure system stability under peak demand.